Every year, Google I/O sets the tone for where digital technology is headed. For developers, engineers, startups, and anyone tracking the evolution of AI, it’s become a can’t-miss event. This year, the spotlight shifted to generative AI, user experience, and new opportunities across mobile and cloud platforms.

But Google didn’t just share updates. It reimagined how AI fits into products used by billions of people. The company has laid out its own vision for the future, and the rest of the industry is entering a new phase of competition, innovation, and growing business demand.

In this article, we review the most important announcements from Google I/O 2025 and highlight the technologies that will shape tomorrow’s digital solutions—from generative AI to Android updates and a more assistant-like UX. These trends are already changing how teams build, scale, and design products, and developers need to be ready.

How Google Reinvented Search: Key AI Features from Google I/O 2025

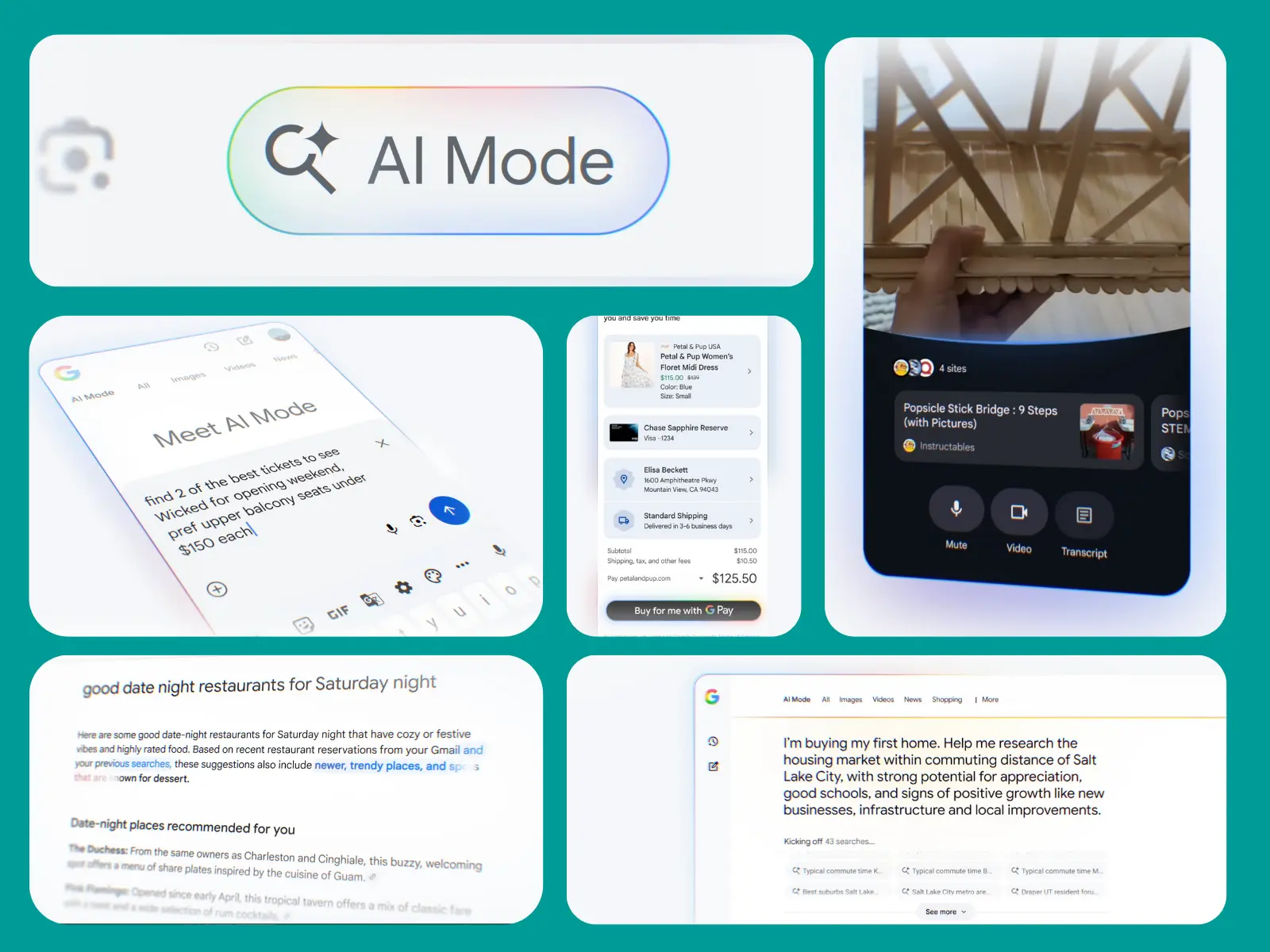

At Google I/O 2025, the company set an ambitious goal — to show how AI works in its products and redefine what digital assistance can be. One of the most significant shifts came in how we use Google Search. It’s no longer about typing keywords. It feels more like a real-time conversation with a system that can see, understand, and respond.

A new AI Mode is beginning to roll out in the U.S., and users can already test Deep Search through Search Labs. This version gives more thoughtful, detailed responses powered by advanced analytics. Features from the Astra project are also making their way into Search — turning it into something much closer to a smart assistant. You can speak into your camera, show an object, ask a question — and get a relevant answer as if you’re talking to an expert.

Google has also added agent-style capabilities: book tickets, reserve a table, or make a doctor’s appointment directly within Search. If you’re tracking a product, AI will notify you when the price drops. And if you’re analyzing data, the system can automatically generate charts — perfect for sports stats or financial reports.

These aren’t just cosmetic upgrades. Under the hood is a custom version of Gemini 2.5, Google’s latest AI model. AI Overviews, the AI-generated summaries in Search, now reach 1.5 billion users each month. In major markets like the U.S. and India, they have already increased search usage by more than 10% for the types of queries where they appear.

Gemini at Google I/O 2025: From Smart AI to Everyday Assistant

Gemini is no longer just an AI model—it’s becoming a complete ecosystem. At Google I/O 2025, the company showed how AI can move beyond intelligence and become truly useful in daily life.

The new version of Gemini is faster, more interactive, and more deeply integrated with everyday tools like Maps, Calendar, Tasks, and Keep. With Gemini Live, users can take action during a conversation — adding calendar events, checking locations, or managing to-dos without switching context.

And that’s just the beginning. Screen and camera sharing on iOS is now available, and a new Create menu in Canvas lets users generate infographics, podcasts, and quizzes in just a few clicks.

Research tools are getting smarter, too. The updated Deep Research feature allows users to upload PDFs and images, and Gmail and Google Drive integration is coming soon. For goal-driven workflows, Agent Mode will soon let users describe an outcome, and Gemini will figure out the steps.

Google is also emphasizing scale. With over 400 million active users monthly, Gemini isn’t just a productivity tool — it’s becoming part of everyday work for millions of teams and individuals.

Google Focuses on Safety, Transparency, and Smarter AI Thinking

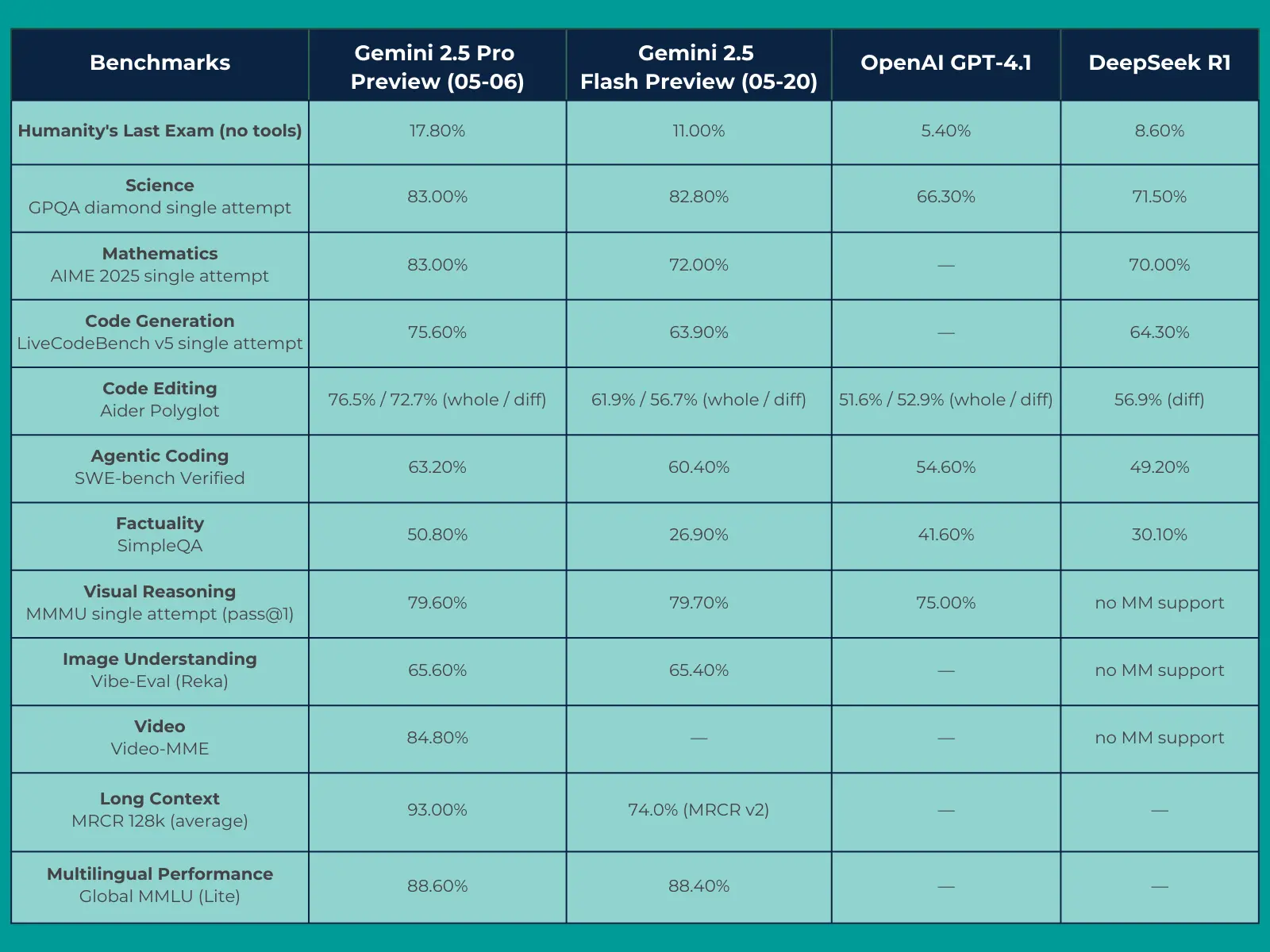

Many of the updates at Google I/O 2025 centered around the new Gemini 2.5 model. The upgraded Pro version is already topping key AI benchmarks like LMArena and WebDev Arena. It will be available for production use in Google AI Studio and Vertex AI in the coming weeks.

However, performance wasn’t the only focus. Gemini 2.5 also brings stronger security: a new protection system helps guard against prompt injection attacks, which makes connecting to external tools safer.

One of the most intriguing features is Deep Think, an experimental mode for complex programming and math tasks. It allows the model to “think” more deeply by running extra calculations, visualizing steps, and testing different approaches before giving an answer.

For developers, these updates unlock:

- More control and flexibility

- The ability to set a thinking budget — defining how many tokens to allocate to reasoning

- Tools to balance speed with depth of thought
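
The thinking budget in the list above can be pictured as one extra field in a request. The sketch below builds, but does not send, a request body in the shape the public Gemini REST API documents at the time of writing. The `thinkingConfig` and `thinkingBudget` field names, the hypothetical helper `build_request`, and the two budget values are assumptions for illustration and do not come from the I/O announcements, so check the current API reference before relying on them.

```python
import json


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a generateContent-style request body (hypothetical helper).

    thinking_budget is the number of tokens the model may spend on
    reasoning before it answers. Field names are assumed from the
    public REST documentation and may change.
    """
    return {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


# Small budget: favor speed for a simple task.
fast = build_request("Summarize this changelog in one line.", thinking_budget=256)

# Larger budget: allow more reasoning for a harder task.
deep = build_request("Find the bug in this recursive function.", thinking_budget=4096)

# Nothing is sent over the network; we only inspect the payload.
print(json.dumps(deep, indent=2))
```

A small budget favors latency and cost, while a larger one gives the model more room to reason on hard coding or math prompts.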

Google also introduced Gemini 2.5 Flash, a faster, lighter model built for quick code generation and fast reasoning tasks. A smaller version—Flash Lite—is on the way for ultra-low-latency use cases.

To improve transparency, Google is rolling out thought summaries, which show how the model reached its answer, including which tools it used and what data it referenced. It’s a meaningful step toward explainable AI.
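
As a rough illustration of how an application might surface thought summaries, the sketch below separates summary parts from answer parts in a response. It assumes a response shape in which thought parts carry a boolean `thought` flag, as the public Gemini REST documentation describes at the time of writing. The helper `split_thoughts` and the `sample_response` data are hypothetical and hand-written, not real model output.

```python
from typing import Any


def split_thoughts(response: dict[str, Any]) -> tuple[str, str]:
    """Return (thought_summary, answer) from a generateContent-style response.

    Parts flagged with "thought": True are treated as the summary;
    all other text parts are treated as the final answer.
    """
    thoughts: list[str] = []
    answer: list[str] = []
    for candidate in response.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            text = part.get("text", "")
            if part.get("thought"):
                thoughts.append(text)
            else:
                answer.append(text)
    return "\n".join(thoughts), "\n".join(answer)


# Hand-written sample in the assumed response shape (not real model output).
sample_response = {
    "candidates": [
        {
            "content": {
                "parts": [
                    {"text": "Checked the loop bounds; the last item is skipped.", "thought": True},
                    {"text": "Change the loop bound to len(items)."},
                ]
            }
        }
    ]
}

summary, answer = split_thoughts(sample_response)
print("Thought summary:", summary)
print("Answer:", answer)
```

Keeping the two streams separate lets a product show the answer by default and reveal the summary only when a user asks how the model got there.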

Finally, features from Project Mariner are now integrated into the Gemini API and Vertex AI. That means developers can automate actions, trigger workflows, and manage interfaces — not just ask questions and get answers.

Media Spotlight at Google I/O 2025: How Generative AI Is Changing Visual and Audio Content

One of the most visually impressive parts of Google I/O 2025 was generative AI in media. Google made it clear that creating high-quality visual and audio content is no longer just for professionals. With the latest tools, anyone can do it.

Here’s what was introduced:

- Veo 3 — a video generation model that creates clips with voiceovers, camera movement, and in-scene object editing.

- Imagen 4 — Google’s most advanced image model to date. It delivers high-resolution visuals with detailed styling, clean typography, and support for multiple aesthetics.

- Together, Veo and Imagen offer a powerful toolset for creative production.

- Flow — a new platform for building animated films. Users define characters, styles, and scenes, while DeepMind powers the generation. The first project, ANCESTRA, is set to premiere at the Tribeca Film Festival.

- Lyria 2 and Lyria RealTime — next-gen music AI models that generate vocals (including choral arrangements), allow real-time sound control, and connect via API.

- SynthID Detector — a tool that identifies whether a media file was AI-generated. At launch, it includes a database of over 10 billion content items.

These tools are designed to help creators, marketers, and teams produce rich media faster and more intuitively — making professional-grade content more accessible than ever.

Project Astra at Google I/O 2025: An AI That Can See, Hear, and Remember

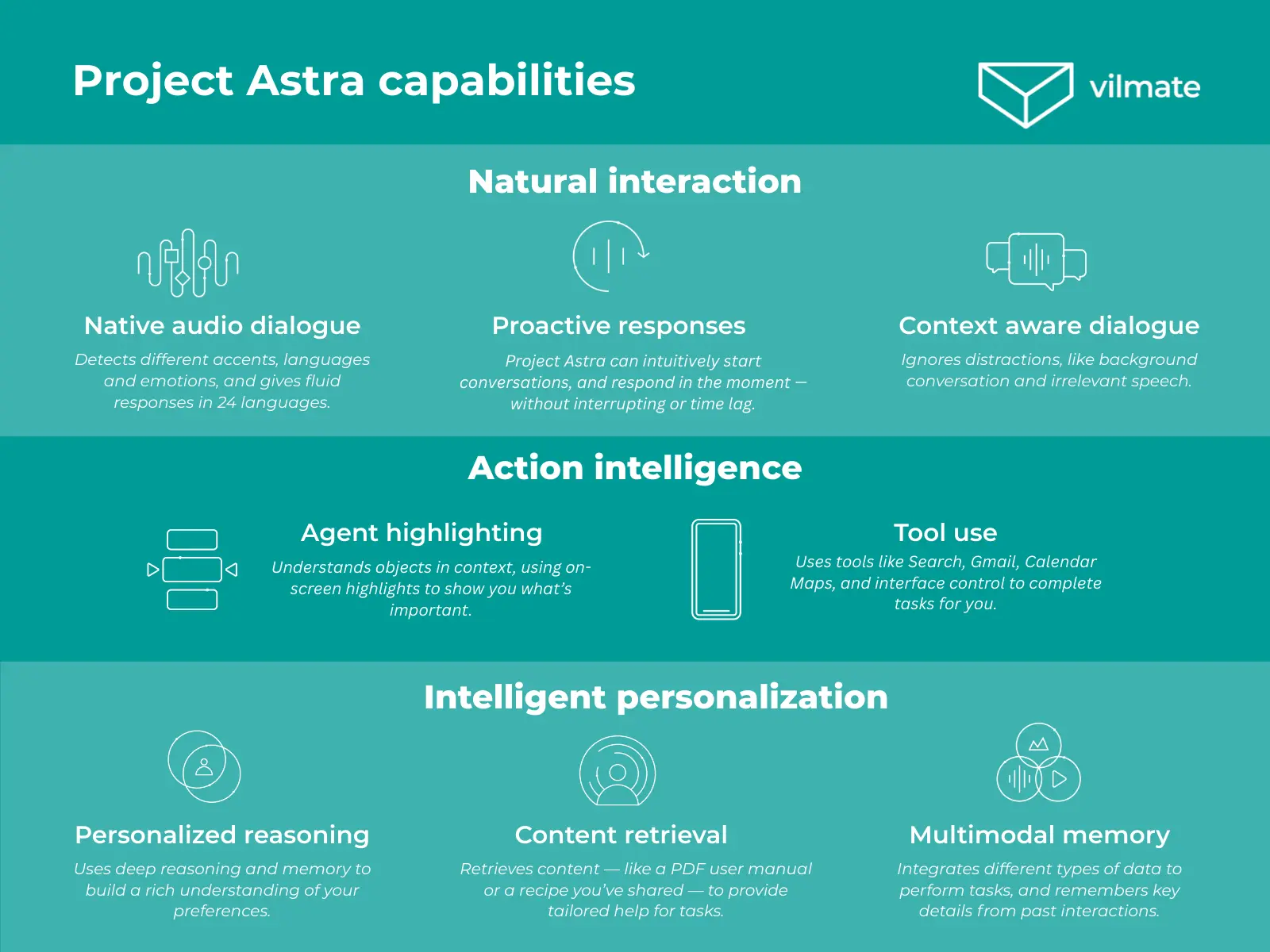

While generative AI is great for creativity, Project Astra focuses on real-world interaction. At Google I/O 2025, Google shared its vision of a next-generation assistant — one that can see, listen, remember, and respond in real time.

This is no longer a concept. Astra can watch through a camera, understand the environment, and react instantly. It can solve complex problems, explain them visually, and act like a study partner. Or it can simply be present with you — recognizing what you see, responding to gestures and voice commands, and assisting contextually.

These capabilities are already being integrated into Gemini Live and other Google products, and there’s more to come. At I/O, Google revealed the first Android XR glasses, created in partnership with Gentle Monster and Warby Parker. These smart glasses can recognize objects, translate speech in real time, take photos, and send messages — all while looking like regular eyewear.

Another exciting announcement was Google’s partnership with Aira, a service that supports people who are blind or have low vision. With Astra, Google is building assistants that help users navigate the world, complete daily tasks, and interact with digital tools — all in real time and with environmental awareness.

Google also introduced Project Moohan, a new XR headset developed with Samsung. It’s designed to deliver immersive experiences for communication, navigation, presentations, and media. The message was clear: XR is no longer experimental — it’s now a real platform, with developer tools arriving later this year.

New Developer Tools at Google I/O 2025

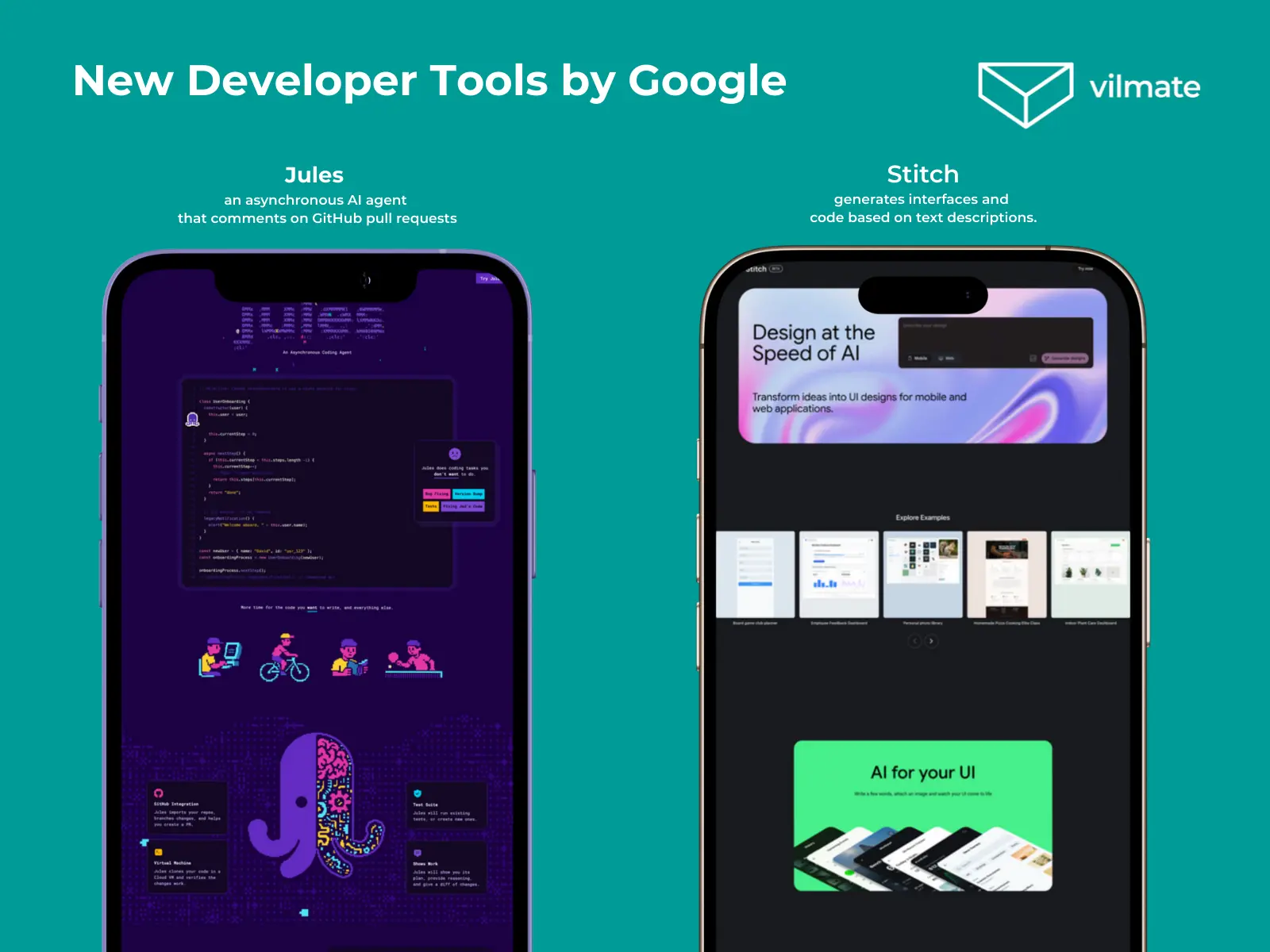

Developers have always been a key focus at Google I/O — and 2025 was no exception. But this year, the company went beyond the usual APIs and SDKs. Tools that once felt complex and disconnected are now part of a more unified workflow — from writing code to designing interfaces, from testing to automation.

One of the most significant announcements was Gemini Code Assist. It’s not just a coding helper — it behaves like a collaborative engineer. It understands your codebase, helps you write and refactor, and even suggests UI improvements. You can use it as a standalone tool or as a GitHub extension. Since it’s powered by Gemini 2.5, it doesn’t just generate code — it understands structure, context, and logic. It also remembers past sessions, so you can easily pick up where you left off.

Other tools announced at I/O include:

- Jules — an asynchronous AI agent that comments on GitHub pull requests

- Android Studio Journeys — lets you describe tests in plain English

- Firebase Studio & AI Logic — make it easier to build AI-first applications

- Stitch — generates interfaces and code based on text descriptions

Another major update was the launch of three new lightweight models: Gemma, SignGemma, and MedGemma. These are open-source and purpose-built for multimodal generation, sign language translation, and medical image analysis, respectively. With this, Google is opening up critical domains to the developer community for research and real-world impact.

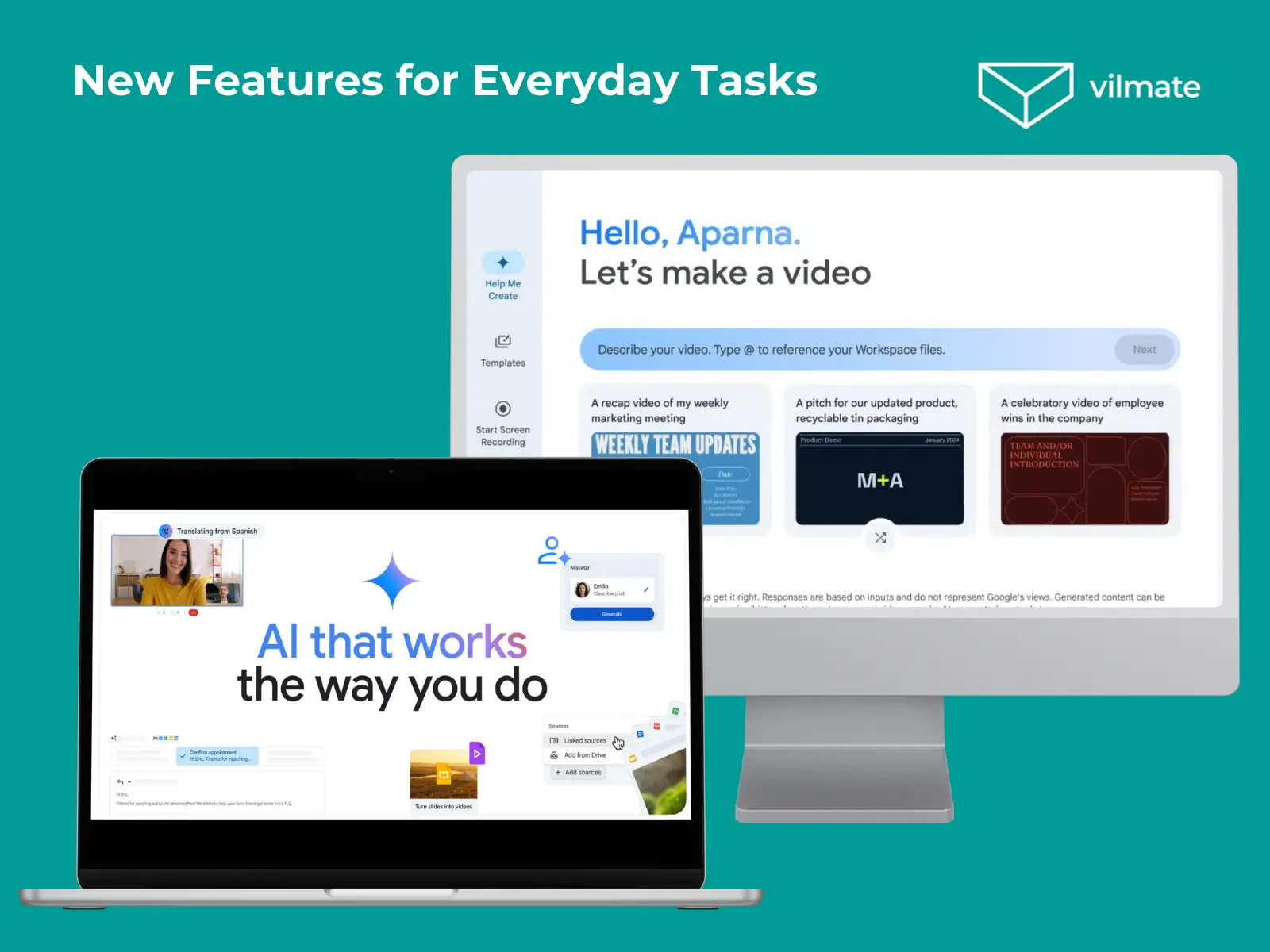

AI in Gmail, Meet, and Google Vids: How AI Is Helping in Everyday Tasks

Google’s most familiar tools are getting a significant intelligence boost. Gmail, Meet, and Docs — the services millions use daily — are now more personalized and AI-powered than ever.

Key updates from Google I/O 2025 include:

- Gmail now offers smart replies that reflect your writing style, understand the conversation’s context, and pull in relevant info from your notes and documents.

- Google Meet adds near real-time translation while preserving tone and meaning — a massive upgrade for global teams.

- Google Vids is a new video generator powered by Gemini. It creates visuals, explains ideas, and builds marketing content — fast and intuitively.

That’s not all. NotebookLM now supports audio summaries and video overviews, turning dry documents into engaging visual formats. And a new experimental tool, Sparkify, can transform complex topics into short, easy-to-follow educational clips.

What Google I/O 2025 Means for Businesses and Developers

So, what does all this mean for product teams and business leaders?

It’s time to rethink priorities.

Google’s message is clear: the old model of static interfaces and single-purpose tools is fading. Users expect personalization, conversational interfaces, instant results, and smarter support. AI is no longer a feature — it’s the foundation of modern UX.

If you’re a developer, here’s where to focus:

- Gemini API and Live API — for real-time content and voice-based interaction

- Deep Research and Canvas — for generating rich, adaptive content

- Imagen and Veo — for creative workflows, onboarding, and training

- Code Assist and Jules — for smarter, faster software development

And for digital product owners?

The message is even simpler: old UX won’t cut it anymore. Today’s tools must anticipate user needs, offer suggestions, and reduce friction. That means moving beyond patchwork updates — and toward a long-term tech strategy.

At Vilmate, we help companies do exactly that. From e-commerce and media to healthcare and education, our teams deliver scalable solutions that turn AI from a buzzword into real value.

AI isn’t optional anymore — it’s the standard. The sooner you lean into it, the easier it will be to stay ahead.

Google I/O 2025 didn’t just show us what’s coming. It showed us that the future is already here.

So the question is — will you follow it or help shape it?

If you’re ready to shape it, Vilmate is here to help.