Intelligence at the Edge: How ML is Driving Edge Computing

artificial intelligenceedge computingmachine learningtechnologyWhen machine learning and edge computing work together, this union is bound to be considered a success in terms of addressing a problem of detection, classification, and prediction of future events. It is possible on the basis that the collected data is handled efficiently. AI-enabled devices and intelligence at the edge of local networks are key factors in driving this efficiency.

Machine learning at the edge use cases

Edge analytics is an approach that allows collecting and analyzing data in the same physical environment that is, at the same time, a data source (be it sensors, devices, or various touch points.) Thus, it appears to be an alternative to waiting for the data to return after being sent to the cloud or on-premise server.

In order to exploit input information, one should consider applying edge analytics. Most machine data generated by medical equipment, industrial machinery, and extreme environments, to name but a few, never reaches the cloud. It does not happen even if they have tons of sensors to collect the data. We will try to explain why it is so a bit later in this article. First, we would like to draw some examples of edge use cases.

#1 Toyota Connected leveraging Amazon Web Services

Toyota is a company that prefers keeping pace with tech advancements. Taking advantage of AWS, they now use video-based tools to perform analytics at the edge, which means that connected vehicle data is received from the sensors. Then, it is used to either build data services for the customers to drive their satisfaction and offer them new car safety and convenience features or take data and use it to improve the products as such. Proving this point, we are ready to give a couple of concrete instances of areas of improvement:

- Detecting and alerting drivers about tornadoes or other dangerous weather conditions. The power of ML and the edge allow doing that faster and more quickly than traditional means.

- Keeping the environment clean. The intelligent edge helps to discover the presence of bunches of trash people are driving by and let competent authorities know about it in no time.

- Reducing graffiti. These are detected in the same way as garbage is.

- Improving maps in real time, optimizing public transportation routes and emergency services. A combination of machine learning and edge computing enables an automatic performance of these operations.

- Improving safety outside and inside the car.

We would like to put a special emphasis on the last point. Since transferring all the data to the cloud is impossible, the solution must be searched for in another place, This place is the edge. Toyota’s edge is the vehicle itself and putting the hardware in it allows us to boost safety through the use of computing. It is possible to predict driver distraction with DeepLens, which is an ML technique and tell normal driving from such types of distraction as being on the phone, texting, looking away or reaching behind.

Generally speaking, it is AWS that cover the entire ML workflow for Toyota Connected.

An end-to-end pipeline for their model works in the following way:

- A DeepLens is taking images in real time while you are driving.

- It is sending images into an S3 bucket.

- The Lambda function, which is configured on the device itself, loads your ML model for you to have your model trained in the cloud, in SageMaker.

- The model artifacts are JSON files, so you deploy them to the device and GreenGrass provides a convenient environment for deployment of your models.

Or, to put it another way, you 1) train a model in Amazon SageMaker, 2) write an AWS Lambda function, and 3) deploy Lambda code using Greengrass.

Once a model and a Lambda function are deployed to your device, Lambda starts capturing data from whatever sensors are available on a device and it uses the model to perform inference ready to run some predictions locally on the device without any communication back to the cloud. Then, if you like to and have network connectivity, you can push back some results — some aggregated data on predicted data back to AWS for further analytics.

Although our pipeline is nearly universal, how exactly your model will work depends heavily on what your business use case is.

#2 Crosser learning on the edge use cases

As it became clear from the previous point, the edge implies streaming analytics. Data processing starts while it is coming off the sensors, while it is still in motion. Consequently, processing is being performed close to the source of that data and the result is delivered in no time wherever it is needed.

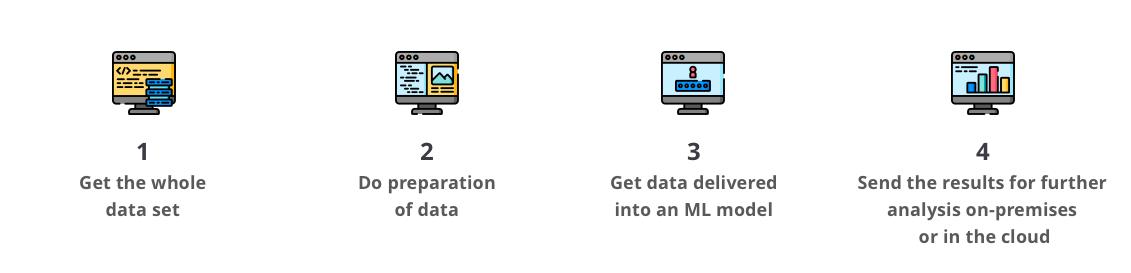

Crosser is, basically, of the same opinion as Amazon for they believe the main machine learning use case in edge devices is anomaly detection. It requires machine-to-machine processing, the results of which are needed locally, so it is reasonable enough to do processing locally, too. Yet, comes with some requirements on the environment where you run ML models. These requirements can be clarified by presenting the stages of the high-level streaming Machine Learning pipeline, as it is understood by Crosser:

- You need to get the whole data set and capture the data you want to use.

- You do preparation of data before it can be used in an ML model. Since the model cannot use raw data, you might need to extract some extra features, provide variances, and align data.

- When the data is prepared, it is delivered into an ML model. There are typically different types of ML frameworks run to host that model.

- Results are delivered for you to be able to send them for further analysis to wherever they are needed — either on-premises or in the cloud.

Accordingly, as is with AWS, normal behavior is learned, so that the expected results can be compared with actual values and anomalies can be detected through technologies built into an ML model.

Crosser has its own Edge Streaming Analytics Solution, whose completed outline looks like this:

- Crosser Cloud that is responsible for orchestrating and managing data and comprising:

- multi-tenant cloud platform

- Flow Studio (a visual design tool)

- Edge Director (a tool for nodes orchestration)

- Monitoring Dashboard

- systems for data management and control

- Crosser Nodes that help process data in the edge to take actions locally using one’s own ML model.

- Nodes are deployed in a Docker Container.

- The high performance of over 100.000 messages per second in a small footprint.

- In-memory processing that results in single digit/millisecond latency.

- Nodes support different ML frameworks, so you can bring your own AI.

Thus, by choosing Crosser, one may not only derive the above benefits but also simplify the implementation of the edge and minimize the need for software developers; dramatically reduce the time of the journey leading from an idea up to its deployment; cut down life-cycle costs replacing hard-to-read code with easily understood flows; and involve non-technical staff in the process.

Though Crosser is at the demo stage, deploying an Edge Node in Crosser Cloud can already now offer the flexibility to integrate anything, any cloud or IoT platform, AWS inclusively.

Benefits of using machine learning at the edge

Now that we know how machine intelligence and edge computing technologies can possibly be combined, it is time to discuss the benefits of this combination.

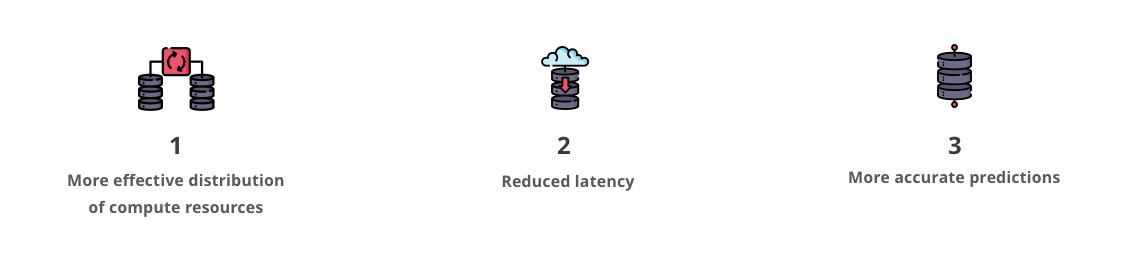

1. More effective distribution of compute resources.

In the offline world, data is typically sent from the sensors in parallel to be nicely aligned right afterwards. In the edge environment, you get your data serially from the sensors. Each of them senses the data independently of all the other series and you don’t know exactly in which order they will come. This causes an alignment problem.

Involving the ML model is the decision for you to make. The model will align all the sensor values on regular time frames so that they were received at the same periods. Knowing a minimum period of time we want to align on and that may be required for a message to arrive from a sensor, you can find a way out of the situation. Yet, that can be a tricky operation since we don’t know anything about the arrival time of messages coming from the sensors by either repeating or discarding values coming from sensors.

2. Reduced latency.

When it comes to computing, latency is often an issue. If the ability of your mobile assets to communicate the data outside of that asset is limited, then you should consider processing in the machine itself. In order to do so, you should apply advanced algorithms at the edge on that machine to be empowered to analyze all the data available and then deliver its small subsets.

Upon reducing the amount of data, you can receive triggers when anomalies occur instead of being sent all the raw data. That could go for other use cases as well. So, you can use ML as a way of reducing the amount of data or extracting higher-valued features. Besides, it makes sense when you need the result of this processing locally or when you need to reduce the amount of data that you need to send to the cloud.

3. More accurate predictions.

ML workflow establishes the best practice for increasing the accuracy of predictions. You use your data in order to train your model, which is an iterative process where you develop the model and then, eventually, you come up with the solution that you feel is good enough. After that, you export the relevant data into some format and make it available so that it can be executed in the edge environment. Besides, the model is updated over time. The reason is that you get access to more data and need to get a more accurate model, which requires further training, or because you are not completely happy with the result and want to get better performance.

Disadvantages of the intelligent edge

To turn weaknesses into strengths, you should first find out these shortcomings. Sure enough, using machine learning at the edge is challenging, as is beneficial. Let us focus for now on what flaws one may encounter:

1. Complex network structure.

We have already addressed this matter earlier in the article. A centralized cloud is a simpler architecture than a distributed edge environment. Numerous heterogeneous network components, not necessarily all of which are provided by the same distributor, are interconnected for ML and the edge to work properly and bring the expected results.

2. Security and privacy issues for edge computing.

Applying advanced security mechanisms is essential to securing transferred data, some of which may be sensitive. It is, in the first place, technically challenging Edge computing security is a real point of concern that that must not be neglected.

3. Costly edge hardware and maintenance costs.

The fact that data processing is done close to the source is not only one of the main advantages. At the same time, it makes an intelligent edge a more costly solution. Distributed systems, unlike centralized cloud architectures, require a rather big amount of local hardware. Consequently, costs are running up, too. Thus, a decentralized edge system with several computing nodes needs more thorough maintenance and administration costs.

Best practices and key factors to consider

When running ML models at the edge, it is important for you to remember the following:

- Most ML models need corresponding ML frameworks to run (ScikitLearn, TensorFlow, PyTorch.)

- There are new exchange formats, so ecosystems like ONNX have been created for you to switch easily between runtime environments.

- When selecting edge hardware for running your ML model, you need to consider the requirements of every particular model. The rule is that a configuration of a model must go with a hardware configuration for, sometimes, more processing power is needed.

- ML models may be very resource hungry, consider it when selecting edge hardware platform.

- Many popular machine learning libraries depend on low-level ones that are available for Intel architectures only, e.g. NumPy.

Conclusion

Artificial intelligence is expected to underpin ever more business models improving their efficiency by providing its predictive algorithms. Machine Learning at the Edge, however, drives even more intelligent solutions. Together, they are creating the architecture of the next-generation networks. Having reviewed some current use cases of ML-Edge combination, we dare to say that the prediction accuracy, which is already high enough, is going to grow and the range of what can be predicted is going to expand. Stay tuned for the industry is sure to offer you the updates in these technologies and further advancements.

© 2019, Vilmate LLC